
If you haven’t heard of “Qwen2,” that’s understandable, but it should all change starting today with a surprising new release that takes the crown from all others in a subject that matters enormously to software development, engineering, and STEM fields the world over: math.

What is Qwen2?

With so many new AI models emerging from startups and tech companies, it can be hard even for those paying close attention to the space to keep up.

Qwen2 is an open-source large language model (LLM) rival to OpenAI’s GPTs, Meta’s Llamas, and Anthropic’s Claude family, but fielded by Alibaba Cloud, the cloud computing division of the Chinese e-commerce giant Alibaba.

Alibaba Cloud began releasing its own LLMs under the sub-brand “Tongyi Qianwen,” or Qwen for short, in August 2023. These included the open-source models Qwen-7B, Qwen-72B, and Qwen-1.8B, with 7 billion, 72 billion, and 1.8 billion parameters, respectively (parameters are the internal settings a model learns during training, and a higher count generally means a more capable model). Multimodal variants followed, including Qwen-Audio and Qwen-VL (for vision inputs), and then Qwen2 in early June 2024 in five variants: 0.5B, 1.5B, 7B, 57B-A14B (a mixture-of-experts model with 14 billion active parameters), and 72B. Altogether, Alibaba has released more than 100 AI models of different sizes and functions in the Qwen family in that time.

And customers, particularly in China, have taken note, with more than 90,000 enterprises reported to have adopted Qwen models in their operations in the first year of availability.

While many of these models boasted state-of-the-art or close-to-it performance upon release, the broader global race in LLMs and AI models moves so fast that they were quickly eclipsed by other open- and closed-source rivals. Until now.

What is Qwen2-Math?

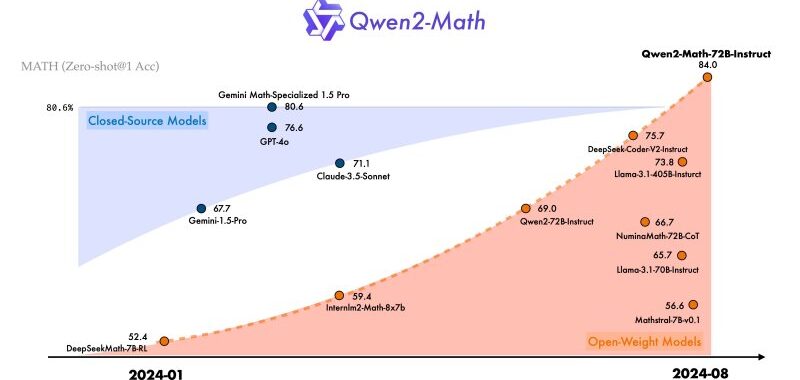

Today, Alibaba Cloud’s Qwen team peeled the wrapper off Qwen2-Math, a new “series of math-specific large language models” designed primarily for English. According to the team’s own benchmarks, the most powerful of these outperforms every other model in the world at math, including the vaunted OpenAI GPT-4o, Anthropic Claude 3.5 Sonnet, and even Google’s math-specialized Gemini 1.5 Pro.

Specifically, the 72-billion-parameter Qwen2-Math-72B-Instruct variant scores 84% on the MATH benchmark, a dataset of 12,500 “challenging competition mathematics problems.” Many of them are word problems, which can be notoriously difficult for LLMs; even simple numeric questions have tripped models up, as in the widely shared test of which is larger: 9.9 or 9.11.
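For readers curious why the 9.9 versus 9.11 question is a trap at all, here is a small illustrative sketch. As decimals, 9.9 is larger. Read as dotted software version numbers, however, 9.11 comes after 9.9, which is one plausible source of the confusion. This is an illustration of the two readings only, not a claim about how any LLM actually reasons; both helper functions are hypothetical names written for this example.

```python
from decimal import Decimal


def larger_decimal(a: str, b: str) -> str:
    """Hypothetical helper: return whichever string is numerically larger as a decimal."""
    # Decimal avoids binary floating-point rounding when parsing "9.9" and "9.11".
    return a if Decimal(a) > Decimal(b) else b


def later_version(a: str, b: str) -> str:
    """Hypothetical helper: compare the same strings as dotted version numbers."""
    def key(v: str) -> tuple:
        # "9.11" -> (9, 11); each dot-separated part is compared as an integer.
        return tuple(int(part) for part in v.split("."))
    return a if key(a) > key(b) else b


if __name__ == "__main__":
    print(larger_decimal("9.9", "9.11"))  # 9.9: the correct math answer
    print(later_version("9.9", "9.11"))   # 9.11: the "version number" reading
```

The same two characters after the decimal point lead to opposite answers depending on which convention is applied, which is why the question works as a quick sanity check.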

Here’s an example of a problem included in the MATH dataset:

Candidly, it’s not one I could answer on my own, and certainly not within seconds, but Qwen2-Math apparently can most of the time.

Perhaps unsurprisingly, then, Qwen2-Math-72B-Instruct also outperforms the competition on the grade-school math benchmark GSM8K (8,500 questions), scoring 96.7%, and on collegiate-level math (the College Math benchmark), scoring 47.8%.

Notably, however, Alibaba did not include Microsoft’s Orca-Math model, released in February 2024, in its benchmark charts. That 7-billion-parameter model (a fine-tuned version of Mistral-7B, whose architecture closely resembles Meta’s Llama) comes close to Qwen2-Math-7B-Instruct on GSM8K, scoring 86.81% versus 89.9% for Qwen2-Math-7B-Instruct.

Yet even the smallest version of Qwen2-Math, with 1.5 billion parameters, performs admirably and comes close to the 7B model, which is more than four times its size, scoring 84.2% on GSM8K and 44.2% on College Math.

What are math AI models good for?

While initial usage of LLMs has focused on chatbots and, in the case of enterprises, on answering employee or customer questions, drafting documents, and parsing information more quickly, math-focused LLMs seek to provide more reliable tools for those who regularly need to solve equations and work with numbers.

Ironically, given that all code is built on mathematical fundamentals, LLMs have so far been less reliable at solving math problems than earlier generations of AI and machine learning, or even conventional software.

The Alibaba researchers behind Qwen2-Math state that they “hope that Qwen2-Math can contribute to the community for solving complex mathematical problems.”

The custom licensing terms for enterprises and individuals seeking to use Qwen2-Math fall short of purely open source: any commercial product or service with more than 100 million monthly active users must obtain additional permission and a license from the creators. But this is still an extremely permissive upper limit that would allow many startups, SMBs, and even some large enterprises to use Qwen2-Math commercially (to make them money) essentially for free.
