Nous Research, the AI research group dedicated to creating “personalized, unrestricted” AI models as an alternative to more buttoned-up corporate outfits such as OpenAI, Anthropic, Google, Meta, and others, has previously released several open source models in its Hermes family, along with new, more efficient AI training methods.

But before today, researchers and users who wanted to actually deploy these models needed to download and run the code on their own machines (a time-consuming, finicky, and potentially costly endeavor) or use them on partner websites.

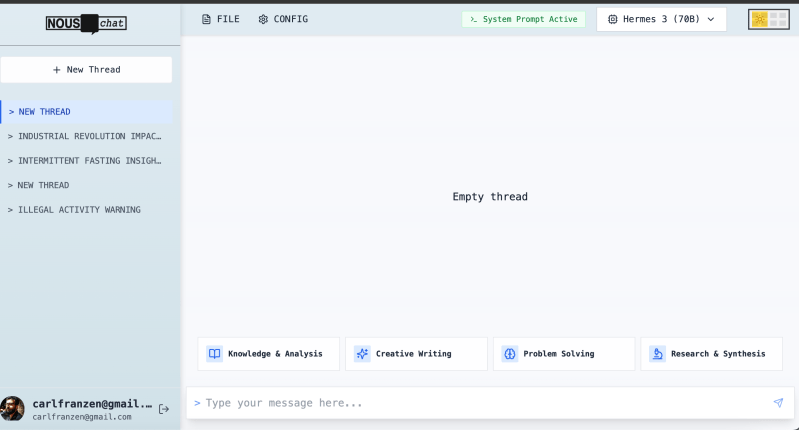

No longer: Nous just announced its first user-facing chatbot interface, Nous Chat, which gives users access to its large language model (LLM) Hermes 3-70B, a fine-tuned variant of Meta’s Llama 3.1, in the familiar format of ChatGPT, Hugging Chat, and other popular AI chatbot tools, with a text entry box at the bottom for the user to type in text prompts and a large space up top for the chatbot to return outputs. As Nous wrote in a post on the social network X:

“Since our first version of Hermes was released over a year ago, many people have asked for a place to experience it. Today we’re happy to announce Nous Chat, a new user interface to experience Hermes 3 70B and beyond.

https://hermes.nousresearch.com

We have reasoning enhancements, new models, and experimental capabilities planned for the future, and this will become the best place to experience Hermes and much more.”

Initial impressions of Nous Chat

Nous’s design language is right up my alley, using vintage fonts and characters evoking early PC terminals. It offers dark and light modes that the user can toggle between in the upper-right corner.

Interestingly, like OpenAI eventually did with ChatGPT — and many other AI model providers as well — Nous Chat also offers suggested or example prompts at the bottom of the screen above the prompt entry textbox, including “Knowledge & Analysis,” “Creative Writing,” “Problem Solving,” and “Research & Synthesis.”

Clicking any of these will send a pre-written prompt to the underlying model through the chatbot, and have it respond, such as serving up a summary of research on “intermittent fasting.”

In my brief tests of the chatbot, it was speedy, serving up answers in single-digit seconds, and was able to produce links back to URLs on the web for sources it cited, though it seemed to hallucinate these as well, on occasion — and the chatbot itself claimed it could not access the web.

Despite Nous’s previously stated aims of enabling people to deploy and control their own AI models without content restrictions, Nous Chat itself does appear to have some guardrails set, including against providing instructions for making illegal narcotics such as methamphetamine.

In addition, the underlying Hermes 3-70B model told me that its knowledge cutoff date was April 2023, making it less useful for obtaining information on current events, an area in which OpenAI is now competing directly against Google and startups such as Perplexity.

Already jailbroken

That hasn’t stopped infamous AI jailbreakers such as Pliny the Prompter (@elder_plinius on X) from quickly cracking the chatbot and getting past its restrictions.

Because it lacks many of the advanced features of other leading chatbots, such as file attachments, image analysis and generation, and interactive code display canvases or trays, Nous Chat is unlikely to replace these rivals for many business users.

But as an experiment it’s certainly interesting and worth playing around with, in my opinion, and if Nous does add more features to it, it could make for a compelling alternative — especially if content restrictions are lifted per Nous’s stated aims.

I’ve reached out to the group for more information on Nous Chat and its approach to guardrails/content restrictions, and I will update when I hear back.
